List of Past Projects

- AIYun!

- Thesis - Autonomous Animation of Humanoid Robots

- NAO humanoid robots in A*STAR Science50 INNOVATI50N exhibition truck

- Olivia humanoid robot in the opening ceremony of Fusionopolis Two

- CMurfs - RoboCup Standard Platform League (SPL)

- Opportunities for Undergraduate Research in Computer Science (OurCS) - Creating a Multi-Robot Stage Production

- Creative TechNights - Instill Life Into Robots

- 16-899 Principles of Human-Robot Interaction - InterruptBot

AIYun!

I co-founded AIYun!, which offered a product called AI-Spy to help hotel and hostel operators make effective daily pricing decisions. Each day, AI-Spy collected the room prices and occupancy offered by the operators’ competitors for the next N stay dates. It then processed the data and generated analysis to help the operators set competitive prices. I also designed and built the website with AngularJS and SQL to collect information from interested clients.

Thesis - Autonomous Animation of Humanoid Robots

Humanoid robots are modeled on humans and have multiple joints that let them actuate their head, arms and legs. They can also convey the meanings of an input signal through body motions. An input signal can be, for example, a piece of music or speech that the robot gestures to. For the class 15-491 CMRoboBits, I choreographed a NAO storytelling robot by manually creating motions and synchronizing them to the speech (video below). What I learned is that manually animating a robot for an input signal requires creating new motions and synchronizing them to the signal, while ensuring that the robot remains stable as it executes them. To animate a new story, I would have to repeat these steps and either create new motions or customize existing ones for the new input signal. Either way, manually animating new input signals is very tedious.

A Storytelling Humanoid Robot: Modelling an Engaging Storyteller

After the tedious manual animation of a story such as Peter and the Wolf, I worked on finding ways to autonomously animate a humanoid robot for an input signal. In my thesis, I considered two input signals, music and speech, as examples to illustrate my work. I formulated the problem of autonomous animation as five core challenges: Representation of motions, Mappings between meanings and motions, Selection of relevant motions, Synchronization of motion sequences to the input signal, and Stability of motion sequences (R-M-S3). I contributed a complete algorithm, comprising my solutions to R-M-S3, that selects the best motion sequence: one that is synchronized to the meanings of the input signal and accounts for audience preferences and the stability of the sequence. The video below shows the NAO robot autonomously dancing to two pieces of music, Peaceful and Angry, and autonomously animating a short story. The last part of the video shows 100 sequences generated for the 100 sentences listed in my thesis.

Autonomous Animation of Humanoid Robots

A*STAR Science50 INNOVATI50N exhibition truck

In my thesis, I investigated how to generate dance motions autonomously to a piece of music. I applied my approach to synchronize dance motions for the NAO humanoid robots to a few Singapore National Day songs such as “Stand Up For Singapore” and “Home”. The songs were pre-processed to determine the timings of the words in the lyrics. The NAO robots were put on display on the A*STAR Science50 INNOVATI50N exhibition truck that went around Singapore. Each NAO humanoid robot took turns to perform. The NAOs were termed the “hot favourites of every child and adult visiting the INNOVATI50N Exhibition Truck”.

NAO Dances to Singapore National Day Songs

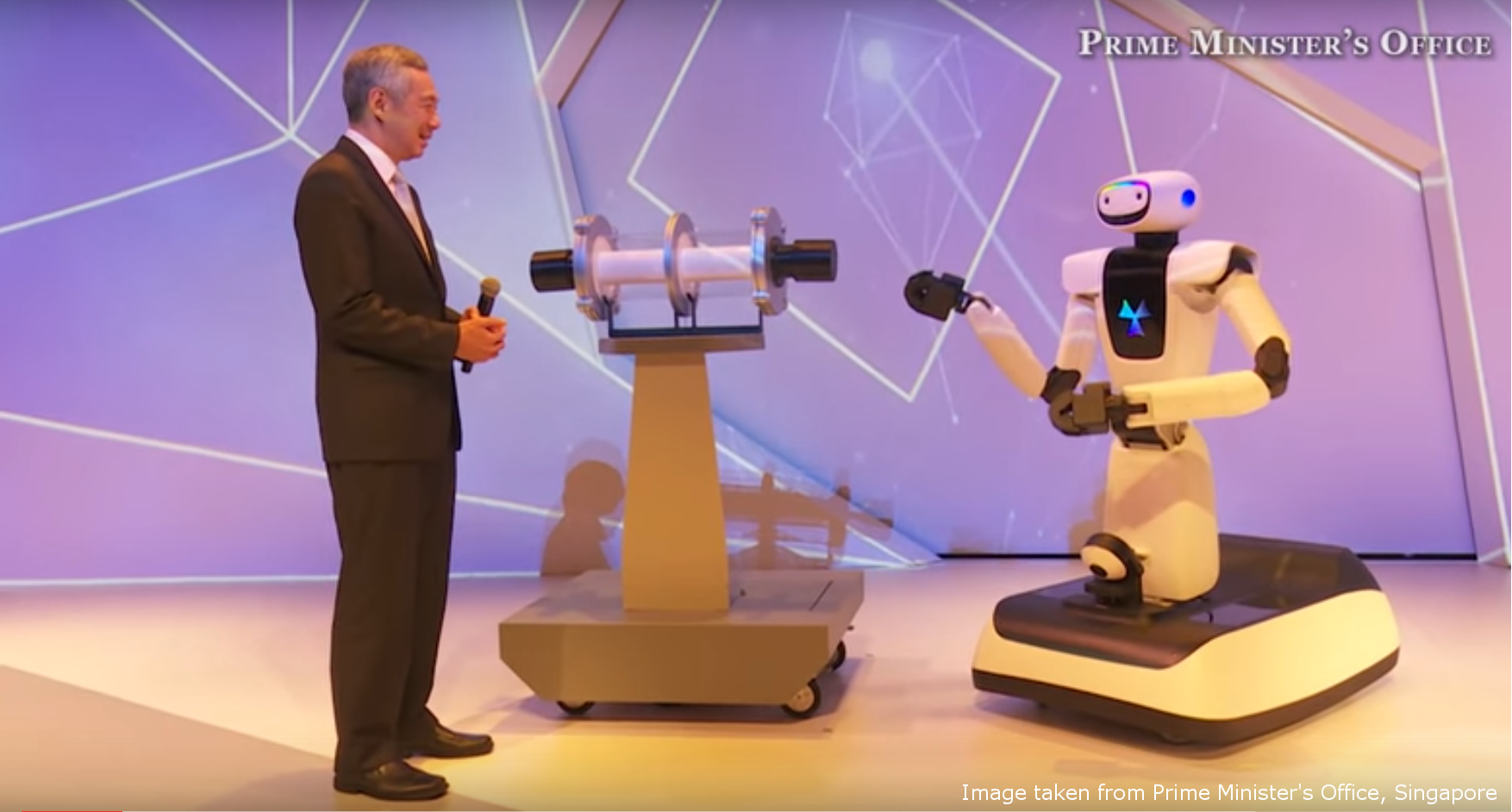
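To illustrate how word timings from pre-processed lyrics could drive a dance, here is a minimal sketch. It is not the thesis algorithm: the names `TimedWord`, `ScheduledMotion` and `schedule_motions`, the word-to-motion mapping, and all timings and durations are hypothetical. It assumes each mapped motion starts at its word's onset and is skipped if the previous motion has not finished.

```python
from dataclasses import dataclass


@dataclass
class TimedWord:
    word: str
    start: float  # seconds from the start of the song


@dataclass
class ScheduledMotion:
    motion: str
    start: float
    end: float


def schedule_motions(words, word_to_motion, motion_durations):
    """Start a mapped motion at each word's onset, skipping any motion that
    would begin before the previously scheduled motion has finished."""
    schedule = []
    busy_until = 0.0
    for w in sorted(words, key=lambda tw: tw.start):
        motion = word_to_motion.get(w.word.lower())
        if motion is None or w.start < busy_until:
            continue  # no motion for this word, or the robot is still moving
        end = w.start + motion_durations[motion]
        schedule.append(ScheduledMotion(motion, w.start, end))
        busy_until = end
    return schedule


# Hypothetical timings and motions, for illustration only.
words = [TimedWord("stand", 1.0), TimedWord("up", 1.4), TimedWord("home", 5.0)]
word_to_motion = {"stand": "rise", "up": "raise_arms", "home": "hug"}
motion_durations = {"rise": 1.0, "raise_arms": 0.8, "hug": 1.5}
plan = schedule_motions(words, word_to_motion, motion_durations)
```

In this example the motion for "up" is dropped because "rise" is still running when that word begins.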

Olivia humanoid robot in the opening ceremony of Fusionopolis Two

On October 19, 2015, Olivia, a humanoid robot, was on stage to interact with the Prime Minister of Singapore, Mr Lee Hsien Loong, in the opening ceremony of Fusionopolis Two. I implemented the algorithm from my thesis to generate gestures autonomously based on the mappings between the motions and the word labels in Olivia’s speech. The motions were limited by the safety speeds provided by the engineering team. As multiple gestures were mapped to the same label and the robot could perform only one gesture at a time, I used the concept of preference values to select which gesture to use. The idea of audience preference values in my thesis stemmed from this project.

Olivia interacting with Prime Minister of Singapore, Mr Lee Hsien Loong
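The selection by preference values described above can be sketched roughly as follows. This is an illustrative assumption, not the code that ran on Olivia: the function `select_gesture`, the labels, the gesture names and the preference numbers are all hypothetical, and the sketch simply picks the highest-valued candidate.

```python
def select_gesture(label, label_to_gestures, preference):
    """Return the candidate gesture with the highest preference value,
    or None when no gesture is mapped to the label."""
    candidates = label_to_gestures.get(label, [])
    if not candidates:
        return None
    # Gestures without a recorded preference default to 0.0.
    return max(candidates, key=lambda g: preference.get(g, 0.0))


# Hypothetical mappings and preference values, for illustration only.
label_to_gestures = {
    "greet": ["wave", "nod", "bow"],
    "thank": ["bow"],
}
preference = {"wave": 0.8, "nod": 0.5, "bow": 0.6}

chosen = select_gesture("greet", label_to_gestures, preference)
```

Here `chosen` is the single gesture performed for the label "greet", and a label with no mapped gestures yields `None`.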

CMurfs - RoboCup Standard Platform League (SPL)

In December 2008, I joined the RoboCup team at Carnegie Mellon University and started working on the NAO humanoid robots. The team was named CMWreagle, as we had teamed up with WrightEagle, the team from the University of Science and Technology of China. I developed a remote control interface to the motion module and behaviors so that users could test motions and behaviors on the NAOs.

From 2010 onwards, our team at Carnegie Mellon University, CMurfs (Carnegie Mellon University Robots For Soccer), participated in multiple competitions such as the US Open and RoboCup SPL. In my years with CMurfs, I created a Visualizer GUI that let us debug our code, observe the original and segmented camera images in real time, and run logs offline. I created multiple keyframe motions, such as a dive (a squatting motion) for the goalkeeper and a kick-sideway motion. The dive ensures that no ball passes through the legs. If the ball ends up between the NAO's legs, it is pushed out when the NAO gets up, so that the NAO can clear it. The kick-sideway motion is designed so that the robot does not have to orbit the ball to kick it to the side.

I also designed and implemented various behaviors such as obstacle avoidance, go-to-point, attacker and goalkeeper. After each competition, I thought about how to improve our team and came up with time-saving developer tools, e.g., a motion scripting tool that imports Choregraphe motions and checks that the imported motions contain no errors, and a deployment tool that deploys to multiple robots simultaneously. These tools saved us considerable time and stress in competitions, especially since we had limited time to fix bugs during half-time and time-outs.

Our team won multiple awards in the US Open (2nd in 2012, 1st in 2011, 3rd in 2009) and in SPL (Top 8 in 2011 and 2009, 4th in 2010). The videos below show highlights of our team's performance in RoboCup SPL and our improvements over the years.

RoboCup 2011 SPL

RoboCup 2010 SPL

RoboCup 2009 SPL

Opportunities for Undergraduate Research in Computer Science (OurCS) - Creating a Multi-Robot Stage Production

The School of Computer Science at Carnegie Mellon University organized a three-day workshop, Opportunities for Undergraduate Research in Computer Science (OurCS). The participants were given opportunities to work on computing-related research problems. Prof. Manuela Veloso came up with the idea of a multi-robot stage production using the NAO humanoid robots and the Lego Mindstorms NXT robots. I created tools and the infrastructure to support the creation of a multi-robot stage production that had to be completed within twelve and a half hours. For example, I built upon our CMurfs code architecture and programmed a Puppet Master that handles communication between the robots and a Remote Display Client. I also wrote sample code to show how a Finite State Machine model can be used. You can read more about the work here. The video below shows snippets of the participants' progress and an eight-minute presentation they gave to explain what they learned and did.

Creating a Multi-Robot Stage Production
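For readers unfamiliar with the Finite State Machine model mentioned above, a minimal table-driven sketch is shown here. It is not the sample code given to the OurCS participants: the `StateMachine` class, the state names and the event names are hypothetical and chosen only to resemble a robot waiting for cues on stage.

```python
class StateMachine:
    def __init__(self, initial, transitions):
        self.state = initial
        self.transitions = transitions  # {(state, event): next_state}

    def handle(self, event):
        """Move to the next state if the event is valid in the current
        state; otherwise stay in the current state."""
        self.state = self.transitions.get((self.state, event), self.state)
        return self.state


# Hypothetical states and events for a robot actor, for illustration only.
TRANSITIONS = {
    ("waiting", "cue"): "performing",
    ("performing", "motion_done"): "waiting",
    ("waiting", "curtain"): "finished",
}

robot = StateMachine("waiting", TRANSITIONS)
trace = [robot.handle(e) for e in ["cue", "cue", "motion_done", "curtain"]]
```

Keeping the transitions in a single table makes it easy to see which events each state responds to. The second "cue" in the example is ignored because the robot is already performing.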

Creative TechNights - Instill Life Into Robots

I was a research scholar in the School of Computer Science at Carnegie Mellon University, advised by Prof. Manuela Veloso, and participated in Creative TechNights, an outreach program from Women@SCS that exposes middle school girls to different technologies such as programming and robotics. I was interested in using the NAO humanoid robot as a storytelling robot and designed two activities:

- The participants posed the NAO robot to express Paul Ekman's six basic emotions: happy, sad, angry, fear, surprise and disgust.

- The participants created a story outline and varied the voices for the characters they designed.

Instill Life Into Robots

16-899 Principles of Human-Robot Interaction - InterruptBot

For the class 16-899 Principles of Human-Robot Interaction, we looked at how a robot's expression of sound can affect a human's willingness to help a robot in distress. Ben and Pearce built the robot, whereas Somchaya and I worked on the software components, such as the remote control and the sounds. We have a wiki documenting our work at http://interruptbot.wikia.com/wiki/InterruptBot_Wiki. This video shows InterruptBot in action.

InterruptBot